REST alternative

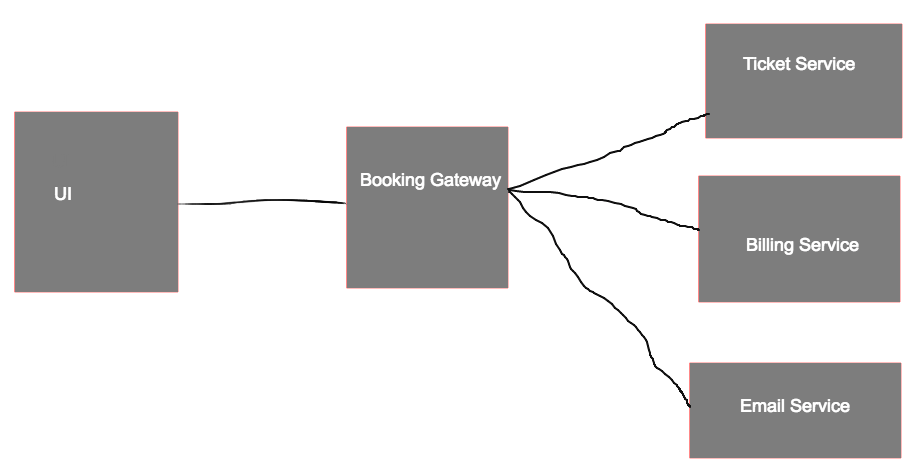

Here’s an example of a distributed system using REST for inter-service communication:

- A user has come to an online travel booking platform and purchased some airline tickets
- The UI communicates the user’s actions to a Booking Gateway backend service
- The UI puts a loading spinner on the screen until Booking Gateway responds
- Meanwhile, Booking Gateway sends an HTTP POST request to the Ticket Service to confirm the tickets are available
- Booking Gateway waits for a response from Ticket Service
- Once a response is received, Booking Gateway makes a POST request to the Billing Service to charge the card
- Booking Gateway waits for a response from Billing Service
- Once a response is received, Booking Gateway finally updates the UI, which removes the spinner and prints a confirmation
- Booking Gateway also sends an HTTP request to the Email Service to send the user a confirmation

Please forgive the amateur diagram; I’m an engineer, not an architect.

–

Here are the key points that make this RESTful:

- the services are using synchronous HTTP request/response communication

- services own their own “resources”, aka business objects, and they take requests to modify those objects (e.g. create a new credit card charge)

The obvious issue to me here is that one link can break the chain. Say, for example, the tickets are available, but the Billing Service is already overwhelmed with requests and returns a 503 Service Unavailable response. What happens to the user experience?
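To make the failure mode concrete, here is a minimal Python sketch of the synchronous chain. It assumes in-process stub objects instead of real HTTP calls. `StubService`, `ServiceUnavailable`, and `book` are hypothetical names, and a 503 is modeled as an exception.

```python
from dataclasses import dataclass, field


class ServiceUnavailable(Exception):
    """Stands in for an HTTP 503 Service Unavailable response."""


@dataclass
class StubService:
    # Hypothetical in-process stand-in for a downstream REST service.
    name: str
    available: bool = True
    received: list = field(default_factory=list)

    def post(self, payload: dict) -> dict:
        # Synchronous request/response: the caller blocks until this returns.
        if not self.available:
            raise ServiceUnavailable(f"{self.name}: 503 Service Unavailable")
        self.received.append(payload)
        return {"ok": True}


def book(order_id: str, ticket: StubService, billing: StubService,
         email: StubService) -> str:
    """Hypothetical Booking Gateway handler; the UI spinner spins until it returns."""
    try:
        ticket.post({"order_id": order_id, "action": "check-availability"})
        billing.post({"order_id": order_id, "action": "charge-card"})
    except ServiceUnavailable as err:
        # One broken link fails the whole chain; the user just gets an error.
        return f"failed: {err}"
    # Also ask Email Service to send the user a confirmation.
    email.post({"order_id": order_id, "action": "send-confirmation"})
    return "confirmed"


if __name__ == "__main__":
    print(book("order-1", StubService("ticket"), StubService("billing"), StubService("email")))
    # confirmed
    print(book("order-2", StubService("ticket"), StubService("billing", available=False), StubService("email")))
    # failed: billing: 503 Service Unavailable
```

In the second call, Ticket Service has already answered successfully. The user still sees a failure, because the gateway can only report back once every call in the chain has returned.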

There are many ways to handle this. One is to implement an alternative protocol for service communication using one-way, asynchronous messaging, similar to the one seen in my Mesos example a few weeks back.

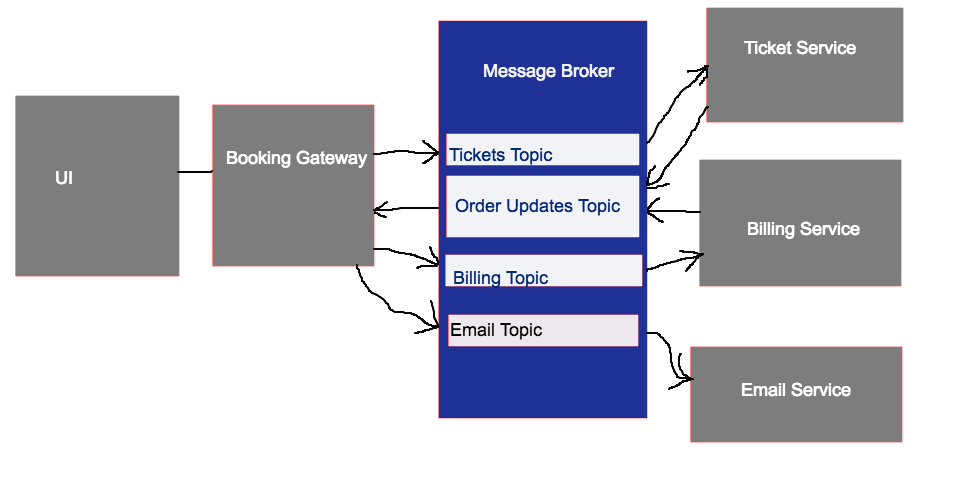

In this new approach, services communicate through a message broker, such as Kafka, instead of making direct HTTP calls. Here’s how it might look:

- A user has come to an online travel booking platform and purchased some airline tickets
- The UI communicates the user’s actions to a Booking Gateway service
- Booking Gateway responds immediately to the UI request, creates a transaction (with a UUID), and puts it into a “pending” state
- The user is informed their transaction is pending; no loading spinner needed
- Booking Gateway drops a message about this order into the Tickets topic
- The Tickets topic is consumed by Ticket Service
- Ticket Service processes the request and drops a response in the Order Updates topic
- Booking Gateway consumes the Order Updates topic
- Once Booking Gateway gets an update on the transaction, it changes the internal state of the order and sends a new message to the Billing topic
- Meanwhile, the UI can poll Booking Gateway and find out that its order has changed state
- Billing Service consumes from the Billing topic, processes the message, and drops an update in the Order Updates topic when finished
- Booking Gateway consumes the message, updates the order state, and sends a message to the Email topic

Holy shit, that is a crappy diagram. Don’t quit your day job.
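The asynchronous flow in the list above can be sketched in a few dozen lines of Python. This is a minimal illustration, not Kafka. `InMemoryBroker` is a hypothetical stand-in in which each topic is a list and each consumer remembers its own offset. The topic names (`tickets`, `order-updates`, `billing`, `email`), the event names, and the order states are all assumptions made for the sketch.

```python
import uuid
from collections import defaultdict


class InMemoryBroker:
    """Hypothetical stand-in for Kafka: each topic is an append-only list and
    each consumer tracks its own offset (read position) per topic."""

    def __init__(self) -> None:
        self.topics: dict[str, list[dict]] = defaultdict(list)
        self.offsets: dict[tuple[str, str], int] = defaultdict(int)

    def publish(self, topic: str, message: dict) -> None:
        # One-way: the producer appends the message and moves on; no reply.
        self.topics[topic].append(message)

    def poll(self, consumer: str, topic: str) -> list[dict]:
        # Hand back everything this consumer hasn't read yet, then advance its offset.
        start = self.offsets[(consumer, topic)]
        batch = self.topics[topic][start:]
        self.offsets[(consumer, topic)] = start + len(batch)
        return batch


class BookingGateway:
    def __init__(self, broker: InMemoryBroker) -> None:
        self.broker = broker
        self.orders: dict[str, str] = {}  # order UUID -> state the UI polls

    def submit(self, tickets: list[str]) -> str:
        # Respond to the UI immediately with a pending transaction; no spinner.
        order_id = str(uuid.uuid4())
        self.orders[order_id] = "pending"
        self.broker.publish("tickets", {"order_id": order_id, "tickets": tickets})
        return order_id

    def status(self, order_id: str) -> str:
        return self.orders[order_id]

    def handle_updates(self) -> None:
        # Consume the order-updates topic and drive each order to its next step.
        for msg in self.broker.poll("booking-gateway", "order-updates"):
            order_id = msg["order_id"]
            if msg["event"] == "tickets-confirmed":
                self.orders[order_id] = "tickets-confirmed"
                self.broker.publish("billing", {"order_id": order_id})
            elif msg["event"] == "charged":
                self.orders[order_id] = "confirmed"
                self.broker.publish("email", {"order_id": order_id})


def ticket_service(broker: InMemoryBroker) -> None:
    for msg in broker.poll("ticket-service", "tickets"):
        # (availability check elided) report back on the order-updates topic
        broker.publish("order-updates", {"order_id": msg["order_id"], "event": "tickets-confirmed"})


def billing_service(broker: InMemoryBroker) -> None:
    for msg in broker.poll("billing-service", "billing"):
        # (card charge elided) report back on the order-updates topic
        broker.publish("order-updates", {"order_id": msg["order_id"], "event": "charged"})


if __name__ == "__main__":
    broker = InMemoryBroker()
    gateway = BookingGateway(broker)
    order = gateway.submit(["outbound", "return"])
    print(gateway.status(order))  # pending
    ticket_service(broker)
    gateway.handle_updates()
    print(gateway.status(order))  # tickets-confirmed
    # If Billing Service were down here, its message would wait in the topic.
    billing_service(broker)
    gateway.handle_updates()
    print(gateway.status(order))  # confirmed
```

Every `publish` returns as soon as the message is appended. If Billing Service is down, its message sits in the `billing` topic and the order stays in `tickets-confirmed` until the service polls again.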

–

In this new pattern, we have Actors sending Messages to topics and then moving on to their next order of business. There’s no waiting around for a response or breaking when another service is down (unless the message broker itself is down, but that’s a whole other situation).

The nice thing about this model is that messages are not lost in the HTTP abyss if a service call fails. Messages live in topics, and consumers can come back online and continue reading them from where they left off, never missing a message (as long as it is still within the topic’s retention period). This means the state of the system will “eventually” be correct. Additionally, the state of the system is easy to reason about: for example, you can check expected state against actual state using consumer offsets.
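The “never missing a message” property comes from consumers tracking committed offsets. Below is a minimal Python sketch that assumes a single-partition topic modeled as an in-memory list. `Topic`, `run_consumer`, and the `billing-service` group name are hypothetical; real Kafka clients manage offsets through their own APIs. Lag (end offset minus committed offset) is one simple way to compare expected state against actual state.

```python
from dataclasses import dataclass, field


@dataclass
class Topic:
    """Hypothetical single-partition topic: an append-only log plus the committed
    offset of each consumer group (Kafka also stores these on the broker side)."""
    messages: list = field(default_factory=list)
    committed: dict = field(default_factory=dict)

    def append(self, message: str) -> None:
        self.messages.append(message)

    def end_offset(self) -> int:
        # Offset the next appended message will receive.
        return len(self.messages)

    def lag(self, group: str) -> int:
        # Expected vs. actual state: messages written but not yet processed by `group`.
        return self.end_offset() - self.committed.get(group, 0)


def run_consumer(topic: Topic, group: str, handle) -> None:
    """Hypothetical consumer start-up: resume from the group's committed offset,
    process everything currently in the log, and commit after each message."""
    offset = topic.committed.get(group, 0)
    while offset < topic.end_offset():
        handle(topic.messages[offset])
        offset += 1
        topic.committed[group] = offset  # commit only after the message is handled


if __name__ == "__main__":
    billing = Topic()
    charged = []
    billing.append("charge order-1")
    billing.append("charge order-2")
    run_consumer(billing, "billing-service", charged.append)

    # Billing Service goes offline while Booking Gateway keeps producing.
    billing.append("charge order-3")
    billing.append("charge order-4")
    print(billing.lag("billing-service"))  # 2

    # Back online: it resumes at offset 2, so nothing is skipped.
    run_consumer(billing, "billing-service", charged.append)
    print(charged)
    print(billing.lag("billing-service"))  # 0
```

Committing after processing gives at-least-once delivery. If the consumer crashes between handling a message and committing its offset, that message is processed again on restart, so handlers should tolerate duplicates.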

–

Diagrams made on https://www.youidraw.com/